Reshape Data

Now, we're going to use Stream's Eval Function to restructure the event, making it easier to read and analyze. We'll get rid of the _raw field, as it's no longer needed after parsing: all of the information from _raw is now in the event itself. We'll also move the original Splunk metadata into fields under a new object, to make it clear to downstream consumers that those fields are Splunk-related.

Restructure Event with Eval

- In the left Pipelines pane, click Add Function.
- Search for Eval.
- Click Eval.
- Scroll down to display the new Eval Function, and click into its Filter field.
- Replace the Filter field's default true value with: splunk_metrics

  (Be careful not to paste in leading or trailing characters. splunk_metrics is what we set as our Destination Field in the Parser Function, so this filter will return true for events that have a splunk_metrics field.)
- For Evaluate Fields, we're going to enter several rows. The first row creates a new, empty object. The following rows add fields to that object, setting each one to the value of a field already in the event. The last row overrides sourcetype with the literal (single-quoted) value 'splunk_metrics'. Click + Add Field to add each row:

  | Name | Value Expression |
  | --- | --- |
  | splunk_metadata | {} |
  | splunk_metadata.host | host |
  | splunk_metadata.index | index |
  | splunk_metadata.source | source |
  | splunk_metadata.sourcetype | sourcetype |
  | sourcetype | 'splunk_metrics' |

- Click Save.

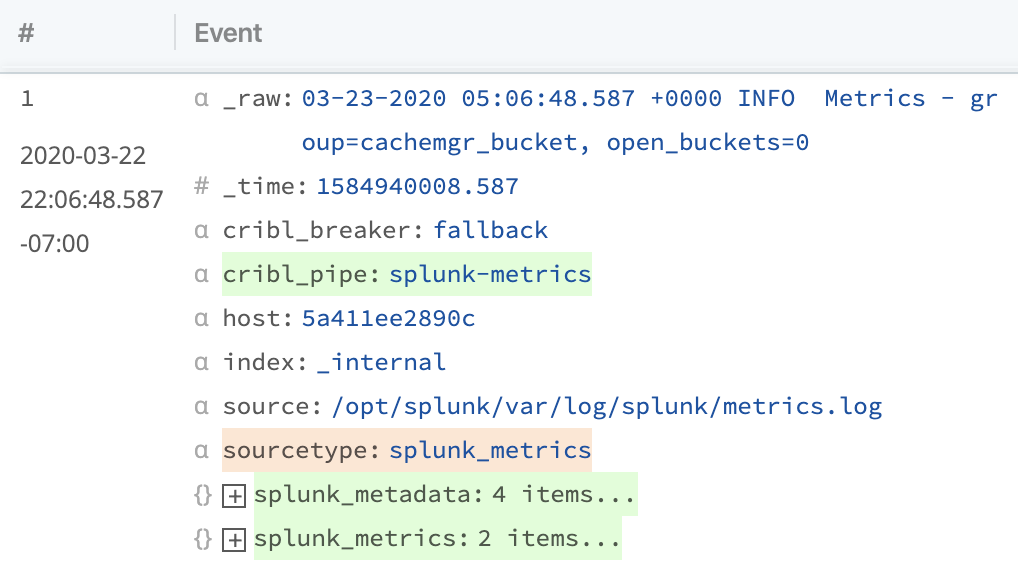
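To make the Filter and Evaluate Fields settings more concrete, here is a minimal Python sketch of what this Eval Function does to a single event. It is an illustration, not Stream's implementation (Stream evaluates JavaScript expressions); the helper name eval_reshape and all sample values are hypothetical. It assumes the Evaluate Fields rows are applied top to bottom, which is why splunk_metadata.sourcetype still captures the original sourcetype before the last row overwrites it.

```python
from typing import Any, Dict

Event = Dict[str, Any]


def eval_reshape(event: Event) -> Event:
    """Hypothetical sketch of the Eval Function configured above."""
    # Filter: Stream evaluates the JavaScript expression `splunk_metrics`;
    # this sketch approximates that by checking that the field is present.
    if event.get("splunk_metrics") is None:
        return event  # non-matching events pass through untouched

    out = dict(event)  # shallow copy, so the caller's event is not mutated

    # Row 1: splunk_metadata = {}  (a new, empty object)
    out["splunk_metadata"] = {}

    # Rows 2-5: copy the original Splunk metadata into the new object.
    for key in ("host", "index", "source", "sourcetype"):
        out["splunk_metadata"][key] = out.get(key)

    # Row 6: overwrite top-level sourcetype with the literal 'splunk_metrics'.
    # Because this row runs last, splunk_metadata.sourcetype keeps the
    # original value.
    out["sourcetype"] = "splunk_metrics"
    return out


if __name__ == "__main__":
    # Hypothetical sample event; every value here is made up.
    sample = {
        "_raw": "cpu=0.42",
        "host": "web01",
        "index": "main",
        "source": "/var/log/app.log",
        "sourcetype": "app_log",
        "splunk_metrics": {"cpu": 0.42},
    }
    reshaped = eval_reshape(sample)
    print(reshaped["sourcetype"])                     # splunk_metrics
    print(reshaped["splunk_metadata"]["sourcetype"])  # app_log
```

If the sourcetype row were placed first instead, the copy under splunk_metadata would capture 'splunk_metrics' and the original value would be lost, so row order matters in this sketch.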

In the right Preview pane, you should now see parsed events that look like this:

We're pretty close, but we'd also like to get rid of a few fields we no longer need. Because host and sourcetype are used in our partitioning expression, we should keep those. But we no longer need _raw, index, or source: the values of index and source are already preserved under splunk_metadata.
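The choice of fields to drop can be sketched in Python as follows. This is a minimal illustration, not Stream's implementation: remove_fields is a hypothetical helper, it matches exact top-level field names only (no pattern matching), and the sample event is made up. It shows why removing index and source loses nothing: their values were already copied under splunk_metadata.

```python
from typing import Any, Dict

Event = Dict[str, Any]


def remove_fields(event: Event, spec: str) -> Event:
    """Hypothetical sketch: drop the top-level fields named in a
    comma-separated list such as "_raw,index,source".
    """
    names = {name.strip() for name in spec.split(",") if name.strip()}
    # Only top-level keys are matched, so nested copies such as
    # splunk_metadata["index"] survive.
    return {key: value for key, value in event.items() if key not in names}


if __name__ == "__main__":
    # Hypothetical event as it might look after the Eval step; values are made up.
    event = {
        "_raw": "cpu=0.42",
        "host": "web01",
        "index": "main",
        "source": "/var/log/app.log",
        "sourcetype": "splunk_metrics",
        "splunk_metrics": {"cpu": 0.42},
        "splunk_metadata": {
            "host": "web01",
            "index": "main",
            "source": "/var/log/app.log",
            "sourcetype": "app_log",
        },
    }
    print(sorted(remove_fields(event, "_raw,index,source")))
    # ['host', 'sourcetype', 'splunk_metadata', 'splunk_metrics']
```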

Remove Fields

- In the Eval Function's Remove Fields field, enter the string: _raw,index,source
- Click Save to update the Pipeline.

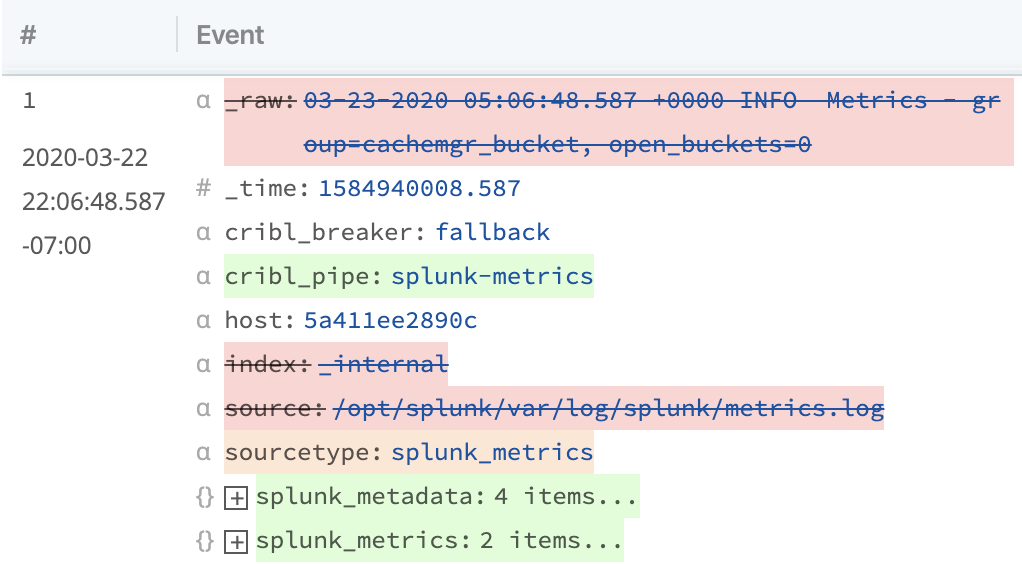

Now, you should see a set of fields being dropped like this:

We've now taken a regular log event, in semi-structured format, and converted it to a JSON document which can easily be analyzed in a number of systems. Next, let's enrich it with some additional context to make querying it easier.