Sampling

In this section, we're going to implement two different sampling methods on our data.

With sampling, events are discarded systematically according to the sample rate, so the original dataset can still be approximated from the events that remain. The primary advantage of sampling is that it defers aggregation to read time, so all the original queries are still possible on samples of the original data. (The exception is needle-in-the-haystack searches for individual events that might have been discarded.)
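Each retained event stands in for several discarded ones, so counts can be approximated by weighting. Below is a minimal sketch of that idea. It assumes that each kept event records its sample rate in a `sampled` field, as the Sampling Function does later in this section, and that events without the field count once. `estimateOriginalCounts` is a hypothetical helper, not part of the product.

```javascript
// Estimate pre-sampling event counts per orderType from the events that survived sampling.
// Assumption: each kept event carries its sample rate in a `sampled` field;
// events without that field were not sampled and count as 1.
function estimateOriginalCounts(events) {
  const totals = {};
  for (const event of events) {
    const weight = event.sampled ?? 1; // one kept event stands in for `sampled` originals
    totals[event.orderType] = (totals[event.orderType] ?? 0) + weight;
  }
  return totals;
}

// Hypothetical events remaining after sampling.
const kept = [
  { orderType: 'ChangeESN', sampled: 5 },
  { orderType: 'ChangeESN', sampled: 5 },
  { orderType: 'NewActivation' },
];
console.log(estimateOriginalCounts(kept)); // { ChangeESN: 10, NewActivation: 1 }
```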

Our first sampling Function, Sampling, is rule-based: if an event matches a rule's filter condition, it is sampled at that rule's static rate. With the second, Dynamic Sampling, the system determines the sample rate itself, attempting to keep event counts relatively even across bins (the values of a grouping key).

Configure Sampling

First, let's add the Sampling Function to our Pipeline. With Sampling, each event is run through a set of Sampling Rules. Like Data Routes or Pipelines, these rules are evaluated linearly. If an event matches a rule's Filter expression, it is sampled at that rule's Sampling Rate. We want to sample events whose orderType is ChangeESN or RatePlanFeatureChange. These are the most frequent values, which makes them good candidates for volume reduction. We'll use a Sampling Rate of 5, meaning that we'll keep 1 out of every 5 events with those orderType values.

- Make sure Manage > Processing > Pipelines is selected in the top nav, with the business_event pipeline displayed.
- Click Sample Data in the right pane.
- Click Simple next to the be_big.log capture.
- Click Add Function, search for Sampling, and click it.
- Scroll down and click into the new Sampling Function.
- Set the Sampling Function's Filter field to: sourcetype=='business_event'
- Under Sampling Rules, click Add Rule.
- In the Sampling Rules table's Filter column, paste the following expression: ['ChangeESN','RatePlanFeatureChange'].includes(orderType)
- Set the adjacent Sampling Rate to 5.
- Click Save.
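The configuration above combines two checks: the Function's Filter and the ordered Sampling Rules. The plain-JavaScript sketch below shows how they could combine to pick a rate for an event. It rests on two assumptions of ours: rules are checked in order and the first match wins, and events that fail the Filter or match no rule pass through unsampled. `findSampleRate` is a hypothetical name.

```javascript
// Sketch of how the configured Sampling Function could choose a rate for an event.
// Assumptions: rules are evaluated in order and the first match wins; events that fail
// the Function's Filter, or match no rule, pass through unsampled (null here).
const functionFilter = (event) => event.sourcetype === 'business_event';

const samplingRules = [
  {
    filter: (event) => ['ChangeESN', 'RatePlanFeatureChange'].includes(event.orderType),
    rate: 5,
  },
];

function findSampleRate(event) {
  if (!functionFilter(event)) return null; // the Function does not apply to this event
  for (const rule of samplingRules) {
    if (rule.filter(event)) return rule.rate; // linear evaluation: first matching rule wins
  }
  return null; // no rule matched: the event is kept as-is
}

console.log(findSampleRate({ sourcetype: 'business_event', orderType: 'ChangeESN' })); // 5
console.log(findSampleRate({ sourcetype: 'business_event', orderType: 'NewActivation' })); // null
console.log(findSampleRate({ sourcetype: 'other', orderType: 'ChangeESN' })); // null
```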

This expression, ['ChangeESN','RatePlanFeatureChange'].includes(orderType), shows off the power of JavaScript in the product, but it warrants some explanation. We declare an array containing two elements, then check whether that array includes the value of the event's orderType field. This is a compact way to check whether a field matches any item in a list of options. It may look a bit backwards, but it works well.
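To see that the compact form behaves like a chain of comparisons, the sketch below evaluates both forms in plain JavaScript against a few orderType values. In the product, orderType is referenced directly as a field. Here it is passed as a parameter, and `matchesRule` and `matchesRuleLonghand` are hypothetical names used only for illustration.

```javascript
// The rule's filter expression, written as a function of orderType for illustration.
const matchesRule = (orderType) => ['ChangeESN', 'RatePlanFeatureChange'].includes(orderType);

// Equivalent long-hand form: one strict comparison per allowed value.
const matchesRuleLonghand = (orderType) =>
  orderType === 'ChangeESN' || orderType === 'RatePlanFeatureChange';

// A missing field (undefined) simply fails to match in both forms.
for (const orderType of ['ChangeESN', 'RatePlanFeatureChange', 'NewActivation', undefined]) {
  console.log(orderType, matchesRule(orderType), matchesRuleLonghand(orderType));
}
```

The array form scales more cleanly: adding a value to the list changes one array literal instead of adding another `||` clause.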

Sampling Rate is an integer N: we keep 1 out of every N matching events. In this case, that's 1 out of 5.
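The sketch below illustrates the "1 out of every N" ratio with a simple counter. This is an assumption for illustration only: the product may choose which events to keep differently, for example at random. Kept events are tagged with `sampled: N`, like the field you'll see in Preview. `makeOneInN` is a hypothetical helper.

```javascript
// Keep 1 out of every `rate` matching events using a counter (illustrative only;
// the product's actual selection method may differ, e.g. random selection).
function makeOneInN(rate) {
  let seen = 0;
  return (event) => {
    seen += 1;
    if (seen % rate !== 0) return null; // drop this event
    return { ...event, sampled: rate }; // keep it and record the rate it represents
  };
}

const sampleAt5 = makeOneInN(5);
const matching = Array.from({ length: 20 }, (_, i) => ({ id: i, orderType: 'ChangeESN' }));
const kept = matching.map(sampleAt5).filter((e) => e !== null);
console.log(kept.length); // 4
console.log(kept.map((e) => e.id)); // [ 4, 9, 14, 19 ]
```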

In the right Preview pane, if you scroll through the capture, you should see a number of events being dropped. If you click the Graph icon next to Select Fields and look at the Number of Events column, you should see around 40 events dropped. To make the list easier to scroll through, let's disable Show Dropped Events.

- In Preview, next to Select Fields, click the gear icon and toggle Show Dropped Events off.

As you scroll through events, note that events with an orderType of ChangeESN or RatePlanFeatureChange will have a sampled field set to 5.

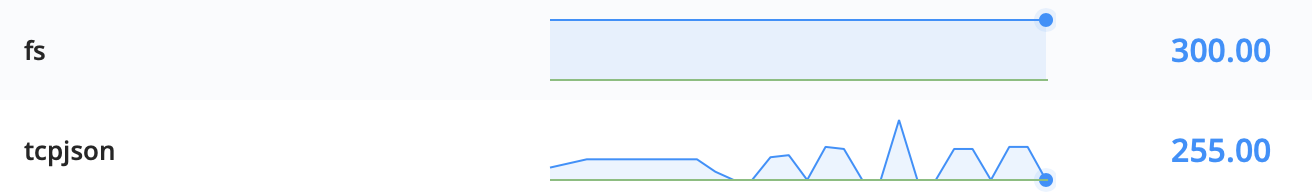

Next, look at the Monitoring > Data > Destinations tab. In the drop-down at the upper right, change the period from the default (previous 15min) to 5min. You should see that tcpjson is at about 60% of fs. Your graph probably looks something like this:

Now, let's move on to Dynamic Sampling.

First, disable the Sampling Function:

- If you looked at the Monitoring tab, navigate back to your business_event pipeline.
- Toggle the Sampling Function to Off.
- Click Save.

Dynamic Sampling

With the Dynamic Sampling Function, you provide an expression that determines which key to sample by, and the Function tries to even out event counts by adjusting the sample rate for each key value. This way, less-frequent messages still get a reasonable number of samples, while more-frequent messages are sampled at a higher rate. The system derives each sample rate from the number of events seen for that value of the key.
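To see why this evens things out, assume that the Square Root sample mode (chosen later in this section) sets each key's rate to roughly the square root of that key's event count. The tutorial does not state this formula, so treat it as an assumption. For a key $k$ with $n_k$ events in a period:

$$
r_k = \sqrt{n_k}, \qquad \text{kept}_k = \frac{n_k}{r_k} = \sqrt{n_k}
$$

A key with 10,000 events gets $r = 100$ and keeps about 100 events. A key with 100 events gets $r = 10$ and keeps about 10. A 100:1 imbalance in the input therefore shrinks to 10:1 in the output.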

Because the key is a JavaScript expression, you can base the sampling on any combination of event values, including values from lookups and other enrichments. In this case, we'll sample on the same field we used for the Sampling Function, orderType.
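Here is a plain-JavaScript illustration of single-field and composite group keys. The `region` field is invented purely to show that a key can combine several values. This section keys on orderType alone, and the exact key syntax the product accepts may differ from these functions.

```javascript
// Hypothetical group-key functions. `region` is an invented field for illustration.
const byOrderType = (event) => event.orderType;
const byOrderTypeAndRegion = (event) => `${event.orderType}:${event.region}`;

const sampleEvent = { orderType: 'NewActivation', region: 'west' };
console.log(byOrderType(sampleEvent)); // NewActivation
console.log(byOrderTypeAndRegion(sampleEvent)); // NewActivation:west
```

With the composite key, NewActivation events from each region would be counted, and sampled, separately.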

- Click Add Function, search for Dynamic Sampling, and click it.
- Scroll down and click into the new Dynamic Sampling Function.
- In the new Function's Filter field, use: sourcetype=='business_event'
- For Sample Mode, choose Square Root.
- For Sample Group Key, enter orderType.
- Since we have a small sample size, under Advanced Settings, set Minimum Events to 1.
- Click Save.
- In the right Sample Data pane, click the gear icon and re-enable Show Dropped Events.
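The settings above (Square Root mode, orderType as the group key, and Minimum Events) can be pictured with the hypothetical sketch below. It derives per-key rates from counts seen in a previous period. Several details are our assumptions and are not confirmed by this tutorial: the rate is the square root of the count, rounded up and never below 1, and keys with fewer than `minEvents` events are left unsampled. The counts themselves are made up.

```javascript
// Hypothetical Square Root rate derivation per group key.
// Assumptions: rate = ceil(sqrt(count)), minimum 1; keys with fewer than
// minEvents events in the previous period are not sampled (rate 1).
function squareRootRates(previousCounts, minEvents) {
  const rates = {};
  for (const [key, count] of Object.entries(previousCounts)) {
    rates[key] = count < minEvents ? 1 : Math.max(1, Math.ceil(Math.sqrt(count)));
  }
  return rates;
}

// Made-up per-orderType counts from one sample period.
const counts = { NewActivation: 100, RatePlanFeatureChange: 49, ChangeESN: 16 };

console.log(JSON.stringify(squareRootRates(counts, 1)));
// {"NewActivation":10,"RatePlanFeatureChange":7,"ChangeESN":4}
console.log(JSON.stringify(squareRootRates(counts, 20)));
// {"NewActivation":10,"RatePlanFeatureChange":7,"ChangeESN":1}
```

The second call shows why the tutorial lowers Minimum Events. With a small capture, a higher threshold would leave less-frequent keys unsampled entirely.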

You'll see dropped events scattered more evenly throughout the sample, and you'll see events of every orderType value dropped. You should also see varying values in the sampled field, because more-popular orderType values, like NewActivation and RatePlanFeatureChange, get sampled at higher rates.

Back on the Monitoring > Overview tab, you should see behavior similar to last time: we should be dropping approximately 40% of events.

- Disable the Dynamic Sampling Function by toggling it from On to Off.
- Click Save.

Next, we're going to cover aggregations.